Associative Memories, and Computing with Content Addressable Memory (CAM)

A core part of today’s computing systems are built around memory, specifically data stored perfectly in Random Access Memories (RAM) such as SRAM or DRAM, or longer term in flash drives or hard drives. Yet there is no exactly analogous capability found in biological information processing systems (brains). Instead, “memories” are much more fuzzy, and they don’t allow for easy data fetching (random access) nor perfect recall. Instead, memories are often triggered: an image, a song, a smell, or a taste can kick-off a chain of associated remembrances. The word “associative memory” is used instead in the fields of cognitive science and psychology. While not allowing for random access, these types of memory are still remarkable in many ways: you can feed in a partial, noisy or distorted input (part of a song, perhaps in a new key) and you can recall the original version and even other memories associated with them (where you were, and who you were with, when you first heard this song). Many researchers have strived to develop and understand mathematical models for how such associative memories could work, such as the development of Hopfield networks, holographic memories, correlograms, etc. A key question is: why didn’t biology evolve perfect memories, like RAM? Could there be energetic and information processing advantages to building computing systems around associative memories rather than RAMs? For certain types of computations, we think the answer is yes.

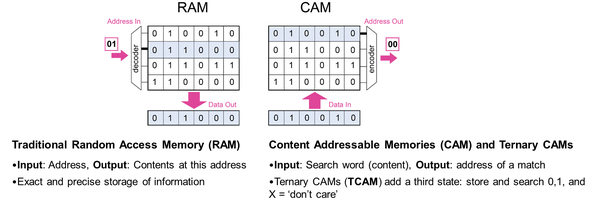

Within electrical engineering and information technology, a type of associative memory has been developed called a Content Addressable Memory (CAM). CAM circuits allow input data to be rapidly searched for any match within the memory. If a match is found, the resulting location is output. This acts almost exactly the opposite to a RAM, where the input is an address and the output is the contents.

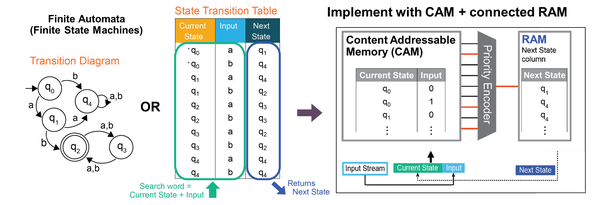

Using such circuit CAMs as part of a computing system rather than a memory system, my team has explored applications that could benefit. This turns out to include important areas in machine learning, security, genomics, and scientific computing. An important example is the broad area of Finite Automata (FA), which are used for regular expression matching with critical applications in the fields of security and genomics. FA are state machines with a set of character inputs, states, and state-transition rules (see below). These can be equivalently encoded into a table called a state transition table. Looking up your current state and current input string within this table then instructs you on which state to go to next, and the procedure is repeated until hitting acceptance or rejection states. The lookup operations in traditional hardware can be very costly and slow, and mapping this instead to a CAM can speed things up tremendously.

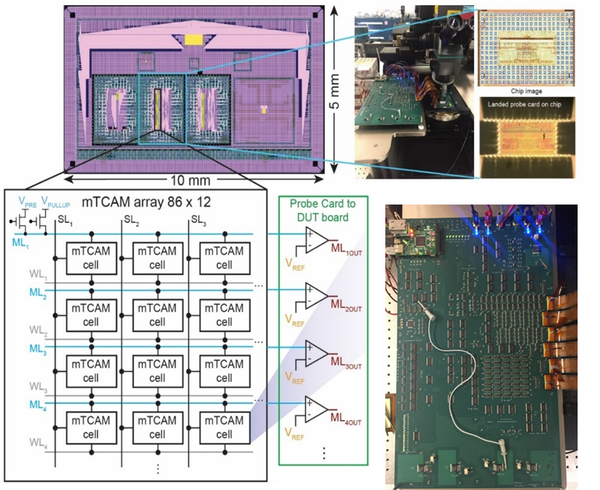

Our team has used this insight to build prototype chips to accelerate regular expression matching in the lab. One of the essential ingredients is to replace traditional SRAM-based CAM circuits with new approaches that use the non-volatility and flexibility of memristive devices (“mTCAM cell” below).

We have used these prototype chips, combined with larger system designs and simulations, to forecast significant speedups and lower power for security and genomics over state-of-the-art hardware available today.

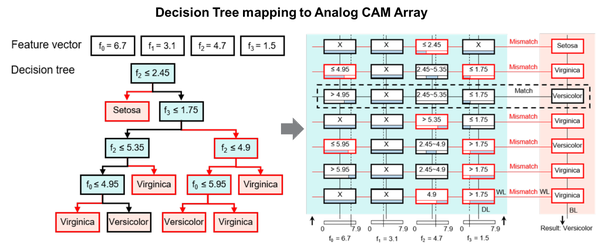

We have taken steps to get closer to biological associative memories by leveraging the analog/continuous-valued properties of memristors in new CAM circuits. This has allowed the invention of an “analog CAM” with the ability to encode “fuzzy” ranges and even search with incomplete information. We see many opportunities for this core associative memory block in neuromorphic computing. And we have already found at least one “killer application” in the area of tree-based machine learning models (Decision Trees, Random Forests, etc). In this case, the root-to-leaf paths of the tree are directly mapped to the analog CAM array:

Our analysis shows that deploying many state-of-the-art tree models to a new analog CAM based architecture allows for blazingly fast inference at low energy (>100x faster and lower energy per decision). This is exciting because tree-based models are extremely popular by data scientists, require smaller data-sets to train, and rival deep learning networks in final accuracy. Another virtue is the resulting model offers increased interpretability and explainability over deep learning. We think this application area is just the tip of the ice-berg and are enthusiastically exploring other neuromorphic areas for the use of associative memory blocks.

Further reading:

- Analog content-addressable memories with memristors

- Tree-based machine learning performed in-memory with memristive analog CAM

- In-Memory Computing with Memristor Content Addressable Memories for Pattern Matching

- Memristor TCAMs Accelerate Regular Expression Matching for Network Intrusion Detection

- Regular Expression Matching with Memristor TCAMs for Network Security