ENERGY EFFICIENCY FOR HPC MADE IN GERMANY

The growing demand for computing, particularly for AI, drives the expansion of supercomputers in number and size. The resulting increase in energy consumption (e.g. approx. 17 MW for JUPITER at JSC) challenges HPC centres, especially in Germany.

Researchers at JSC have joined forces with experts from other major German HPC centres to examine which innovations are needed to meet sustainability and energy efficiency requirements driven by high energy costs, national policy, and commitment to environmental sustainability. They identified a range of measures that can lead HPC into an energy-efficient future (https://doi.org/10.3389/fhpcp.2025.1520207).

The following institutions contributed to the review: Deutsches Klimarechenzentrum (DKRZ), Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), High-Performance Computing Centre Stuttgart (HLRS), Jülich Supercomputing Centre (JSC), Karlsruhe Institute of Technology (KIT), Leibniz Supercomputing Centre (LRZ), Max Planck Computing and Data Facility (MPCDF) and Technische Universität Dresden (TUD).

Together, the German HPC sites listed above host 20 machines in the Top 500 list (status November 2024), ranked from 18th (JETI at JSC) to 491st (HoreKa-Blue at KIT), as well as 4 of the 10 most energy-efficient systems in the Green 500 list (JEDI in 1st place; Strohmaier et al., 2024b).

Need for Energy-Efficient HPC Solutions

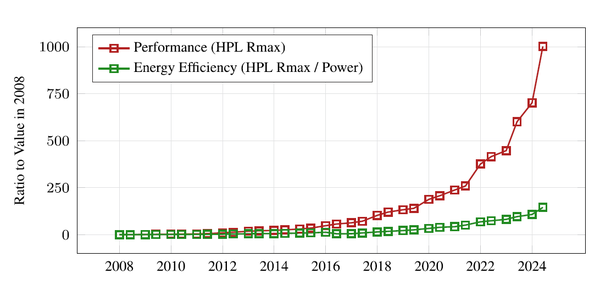

Compute demands are outpacing energy efficiency improvements (Fig. 1), making sustainability a key concern. Societal worries about environmental impact, Germany's position as the second-largest host of data centres globally, and high energy costs (around 0.20 €/kWh, increasing by 3% annually) further emphasise the need for efficiency.
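To give a feeling for the scale of these costs, the following minimal sketch combines the electricity price and annual increase quoted above with the approx. 17 MW mentioned for JUPITER. It assumes a constant load around the clock and a price that compounds once per year; both are simplifying assumptions made for illustration, not figures from the review, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_energy_cost_eur(power_mw, price_eur_per_kwh):
    """Electricity cost of one year at a constant load (simplifying assumption)."""
    energy_kwh = power_mw * 1000 * HOURS_PER_YEAR
    return energy_kwh * price_eur_per_kwh


def projected_costs_eur(power_mw, base_price, annual_increase, years):
    """Yearly cost when the price compounds by `annual_increase` each year."""
    return [
        annual_energy_cost_eur(power_mw, base_price * (1 + annual_increase) ** year)
        for year in range(years)
    ]


# Illustrative inputs: 17 MW, 0.20 EUR/kWh, 3% increase per year, 5 years.
for year, cost in enumerate(projected_costs_eur(17, 0.20, 0.03, 5)):
    print(f"year {year}: {cost / 1e6:.1f} million EUR")
```

Under these assumptions the first year alone comes to roughly 30 million euros, which illustrates why even small percentage gains in efficiency matter at this scale.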

National and EU policies, such as the German Energy Efficiency Act and the European Supply Chain Directive, drive these efforts.

Effective HPC site design and operation must integrate energy efficiency measures, including improved cooling, energy reuse, monitoring infrastructures, performance-conscious programming, and optimised hardware architectures.

Measures for Energy-Efficient HPC Systems

Improved Cooling:

Advancements in microprocessors towards finer nanometre structures enhance computing power and performance per watt but also increase power density. This makes traditional air cooling inadequate and drives the demand for more effective direct liquid cooling (DLC). HPC centres are well-suited for DLC using warm water (30–40°C), which eliminates the need for chillers and enables year-round free cooling to reduce costs. Fully integrated DLC can remove over 95% of heat. Only large storage systems and specialised nodes may still rely on air cooling. While centres are transitioning to DLC, rising compute densities (over 200 kW per rack in the future) may require lower cooling temperatures, potentially reducing efficiency and increasing environmental impact.
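The link between rack power and cooling effort can be made concrete with the basic heat balance Q = ṁ · c_p · ΔT. The sketch below estimates the water mass flow needed for one rack, using the 200 kW and 95% figures from the paragraph above. The 10 K temperature rise across the rack and the specific heat of water (about 4186 J/(kg·K)) are assumptions chosen for illustration, and the function name is hypothetical.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water


def coolant_mass_flow_kg_per_s(rack_power_kw, dlc_fraction, delta_t_k):
    """Water mass flow needed to carry away the liquid-cooled share of rack heat.

    Uses Q = m_dot * c_p * delta_T, solved for m_dot.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    heat_w = rack_power_kw * 1000 * dlc_fraction
    return heat_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)


# Illustrative inputs: 200 kW rack, 95% of heat captured by DLC, assumed 10 K rise.
flow = coolant_mass_flow_kg_per_s(200, 0.95, 10)
print(f"required flow: {flow:.2f} kg/s per rack")
```

With these inputs a single rack needs on the order of 4.5 kg of water per second. Halving the permitted temperature rise doubles the required flow, which is one way to see why denser racks put pressure on the cooling loop.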

Energy Reuse:

Traditionally, data centres were highly customised for specific HPC generations, but modern approaches favour buildings designed for long-term use that optimise heat utilisation. HPC centres are shifting from a cooling-first to a heat-reuse-first approach, as heat reuse is a highly effective sustainability strategy. DLC supports efficient heat reuse, but HPC heat output often exceeds local building demands. Additionally, for optimal heat transfer to city networks or large campuses, the water has to be supplied at 70–90°C. Reaching this temperature requires large heat pumps.
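Why the temperature gap matters can be illustrated with the Carnot limit for a heat pump, COP = T_sink / (T_sink − T_source) with temperatures in kelvin. The sketch below evaluates this theoretical upper bound for lifting warm-water return heat to district-heating temperatures. The specific temperature pairs are picked from the ranges quoted in this article as examples, real heat pumps achieve only a fraction of the Carnot value, and the function name is hypothetical.

```python
def carnot_cop_heating(source_c, sink_c):
    """Theoretical upper bound on the heating COP of a heat pump.

    source_c: temperature of the heat source in degrees Celsius
    sink_c:   temperature at which heat is delivered in degrees Celsius
    """
    t_source = source_c + 273.15
    t_sink = sink_c + 273.15
    if t_sink <= t_source:
        raise ValueError("sink must be warmer than source")
    return t_sink / (t_sink - t_source)


# Example pairs taken from the ranges in the text (30-40 °C source, 70-90 °C sink).
for source_c, sink_c in [(40, 70), (40, 80), (30, 90)]:
    cop = carnot_cop_heating(source_c, sink_c)
    print(f"{source_c} °C -> {sink_c} °C: Carnot COP <= {cop:.1f}")
```

The bound shrinks as the lift grows, so warmer return water from the DLC loop directly reduces the electrical energy a heat pump needs per unit of delivered heat.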

In terms of infrastructure, a Modular Data Centre (MDC), such as the one in Jülich, allows data centre provisioning to be matched exactly to system requirements without installing surplus infrastructure.

Monitoring Infrastructures:

Monitoring the energy consumption of HPC systems is challenging, as it involves ingestion, processing, storage, and retrieval of data. Effective energy monitoring in HPC centres tracks up to 8 million metrics with 10 updates per second. Data is collected from diverse sources, including compute nodes, network infrastructure, storage, and buildings. The monitoring process itself has minimal energy impact, and the insights gained into jobs more than compensate for any cost of monitoring.
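A back-of-the-envelope estimate shows why ingestion and storage are the hard part. The sketch below turns the upper-bound figures from the paragraph above (8 million metrics, 10 updates per second) into a sample rate and a raw daily data volume. The 16 bytes per sample (an 8-byte timestamp plus an 8-byte value, uncompressed) is an assumption for illustration only, and the function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def samples_per_second(metric_count, updates_per_second):
    """Total number of samples the monitoring stack must ingest each second."""
    return metric_count * updates_per_second


def raw_bytes_per_day(metric_count, updates_per_second, bytes_per_sample):
    """Uncompressed data volume per day for a fixed sample size."""
    rate = samples_per_second(metric_count, updates_per_second)
    return rate * SECONDS_PER_DAY * bytes_per_sample


# Upper-bound figures from the text; bytes_per_sample is an assumed value.
rate = samples_per_second(8_000_000, 10)
volume = raw_bytes_per_day(8_000_000, 10, bytes_per_sample=16)
print(f"ingest rate: {rate / 1e6:.0f} million samples/s")
print(f"raw volume:  {volume / 1e12:.1f} TB/day")
```

Under these assumptions the raw stream exceeds 100 TB per day, which suggests why practical setups depend on measures such as downsampling, aggregation, or compression rather than storing every sample indefinitely.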

Data displayed on dashboards are used by administrators and support personnel to manually monitor the system. System-wide monitoring provides a bird’s-eye view of a full system or insights down to single racks or even nodes. Job-specific analysis helps to identify inefficiencies and optimise resource utilisation. This enables the identification of measures to further improve energy efficiency.

Performance-conscious Programming:

Optimising software enhances hardware utilisation, improving both performance and energy efficiency. High-level approaches help new HPC users adopt portable and performance-conscious programming, while advanced performance engineers support optimisation efforts on the backend of programming models to adapt them to specific hardware features.

Optimised Hardware Architectures:

Processing units are the most energy-intensive components of HPC hardware. Heterogeneous architecture designs utilising CPUs and hardware accelerators, such as JSC’s Modular Supercomputing Architecture, increase energy efficiency. While accelerators (such as GPUs) increase performance per watt for highly parallel tasks, CPUs execute code parts with limited parallelism and service tasks. Well-coordinated allocation and scheduling further improve energy efficiency.

Storage, while accounting for only ~8% of an HPC system’s total power consumption, also bears potential for greater sustainability. Energy use for storage is divided into idle consumption, data access, and system-wide effects. While idle power is difficult to reduce, SSDs and semiconductor advancements lower access energy.

Power management optimises energy use by dynamically adjusting processor frequencies for each job based on its workload type. Memory-bound tasks benefit from lower frequencies, because their runtime is dominated by waiting for data rather than by clock speed, while compute-bound workloads require higher frequencies. Power capping ensures operations remain within set power limits.
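The trade-off behind frequency selection can be sketched with a toy model. It assumes that only the compute share of the runtime scales with clock frequency, that dynamic power grows with the cube of the frequency, and that a job may be slowed down by at most 10%. All of these modelling choices, the numeric defaults, and the function names are assumptions for illustration; they are not the method used at any of the centres.

```python
def runtime_s(freq_ghz, f_max_ghz, compute_s, memory_s):
    """Runtime when only the compute share scales with clock frequency."""
    return compute_s * (f_max_ghz / freq_ghz) + memory_s


def power_w(freq_ghz, f_max_ghz, static_w, dynamic_max_w):
    """Static power plus dynamic power that scales with frequency cubed."""
    return static_w + dynamic_max_w * (freq_ghz / f_max_ghz) ** 3


def pick_frequency(freqs_ghz, compute_s, memory_s,
                   static_w=100.0, dynamic_max_w=200.0, max_slowdown=0.10):
    """Return the lowest-energy frequency within the allowed slowdown."""
    f_max = max(freqs_ghz)
    baseline = runtime_s(f_max, f_max, compute_s, memory_s)
    best_freq, best_energy = None, None
    for freq in freqs_ghz:
        duration = runtime_s(freq, f_max, compute_s, memory_s)
        if duration > baseline * (1 + max_slowdown):
            continue  # too slow compared with running at full frequency
        energy = power_w(freq, f_max, static_w, dynamic_max_w) * duration
        if best_energy is None or energy < best_energy:
            best_freq, best_energy = freq, energy
    return best_freq


freqs = [1.0, 1.5, 2.0, 2.5]
print("memory-bound job: ", pick_frequency(freqs, compute_s=10, memory_s=90), "GHz")
print("compute-bound job:", pick_frequency(freqs, compute_s=90, memory_s=10), "GHz")
```

In this model the memory-bound job can run at a reduced clock with little loss in runtime, whereas the compute-bound job needs the highest frequency to stay within the slowdown limit, mirroring the behaviour described above.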

🔎All findings and details can be found in the review article: https://doi.org/10.3389/fhpcp.2025.1520207

JSC participation in the SEANERGYS project

The Jülich Supercomputing Centre plays a significant role in upcoming large, third-party-funded international projects that investigate innovative solutions for energy-efficient HPC systems. These include in particular the SEANERGYS (Software for Efficient and Energy-Aware Supercomputers) project, which JSC coordinates. Starting in Q2 2025, the project will improve energy efficiency and optimise resource utilisation at the HPC/AI system level.

More info on the SEANERGYS project page.

Contacts at JSC:

Estela Suarez

Andreas Herten