Support and Service

We provide support and services in the following areas:

Scientific Visualization

The visualization of scientific data is a cornerstone of modern research, playing a critical role in data analysis, algorithm development, and the effective presentation of scientific results. Visualization not only helps researchers to interpret and explore complex datasets but also serves as a powerful tool for visual debugging, allowing developers to identify and address issues in algorithms with greater efficiency. Moreover, it transforms raw data into compelling visual representations, making it easier to communicate findings to a broader audience, including peers, interdisciplinary collaborators, and the general public.

One of the key challenges in this domain is the sheer size of the datasets generated by advanced simulations and experiments. At JSC, these datasets are stored on centralized high-performance storage systems and are often too large to transfer to local workstations for analysis and visualization. Visualization workflows must therefore be integrated directly into the high-performance computing (HPC) environment. Remote visualization techniques make this possible: the data is rendered where it is stored, without time-consuming transfers.

The ATML Visualization & Interactive HPC group provides comprehensive solutions to meet these challenges. Specifically, we offer:

- Software packages for data visualization on HPC systems: These tools are optimized for high-performance environments, ensuring scalability and efficiency when working with large datasets.

- Remote visualization tools: These enable users to interact with and visualize their data directly on the HPC systems, providing a smooth and responsive experience even for highly complex visualizations.

- Expertise in in-situ visualization: This approach integrates visualization directly into the running simulation, so researchers can analyze data in real time as it is generated, which saves storage space and reduces post-processing time.
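
The in-situ idea from the last item can be illustrated with a small, purely hypothetical sketch. It does not use any JSC tool or in-situ library; the toy diffusion solver and the names `simulate_step`, `reduce_in_situ`, and `run` are assumptions made for illustration. The point is only that each time step is reduced to a compact summary while the full field is still in memory, so the full field never has to be written to disk.

```python
def simulate_step(field, dt=0.1):
    """Toy 1D diffusion update with fixed boundaries (stand-in for a real solver)."""
    new = field[:]
    for i in range(1, len(field) - 1):
        new[i] = field[i] + dt * (field[i - 1] - 2.0 * field[i] + field[i + 1])
    return new


def reduce_in_situ(field, bins=4, lo=0.0, hi=1.0):
    """Reduce the in-memory field to a small histogram instead of storing it."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for value in field:
        index = int((value - lo) / width)
        index = max(0, min(index, bins - 1))  # clamp values on the range edges
        counts[index] += 1
    return counts


def run(steps=50, size=64, every=10):
    """Advance the toy simulation and keep only one summary every `every` steps."""
    field = [0.0] * size
    field[size // 2] = 1.0  # initial spike in the middle of the domain
    summaries = []
    for step in range(1, steps + 1):
        field = simulate_step(field)
        if step % every == 0:
            summaries.append((step, reduce_in_situ(field)))
    return summaries


if __name__ == "__main__":
    for step, histogram in run():
        print(step, histogram)
```

In a production setting the reduction step would typically be a rendering or analysis call from a dedicated in-situ framework rather than a histogram; the structure of the loop is what carries over.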

With these resources and this expertise, the ATML Visualization & Interactive HPC group helps researchers gain deeper insights from their data, streamline their workflows, and present their findings with clarity and impact.

Virtual Reality

When scientific data exhibits complex three-dimensional structures, specialized visualization systems, such as virtual reality (VR) devices, offer significant advantages for analysis and exploration. These systems enable researchers to interact with their data in an immersive and intuitive manner, providing a deeper understanding of intricate spatial relationships and structures that might be challenging to comprehend through traditional visualization methods.

At JSC, affordable head-mounted displays (HMDs) are utilized to bring VR capabilities to researchers. These devices support stereoscopic visualization, allowing users to perceive depth and spatial relationships in their data with remarkable clarity. This immersive experience is further enhanced by the integration of tracked input devices, which enable users to interact with the virtual environment. Researchers can manipulate 3D structures directly and explore data from multiple perspectives in real time.

To power these advanced VR applications, the Unreal Engine serves as the 3D rendering backend. Known for its high-performance capabilities and exceptional visual fidelity, the Unreal Engine ensures that complex datasets are rendered smoothly and with precise detail. Its flexibility also allows for the development of customized VR solutions tailored to specific research needs.

Multimedia

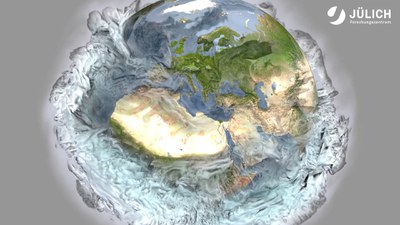

To effectively communicate scientific results and workflows to a broader audience, we also focus on the creation of high-quality multimedia productions. These productions are designed to make complex scientific concepts more accessible, engaging, and visually compelling for diverse audiences, including researchers, policymakers, and the general public.

Our multimedia workflow combines industry-standard tools and innovative approaches to produce high-grade content. For video editing, we utilize Adobe Premiere Pro in conjunction with Adobe After Effects, enabling us to craft polished video clips with seamless transitions, dynamic effects, and precise editing. These tools are particularly valuable for creating visually appealing presentations that highlight key findings or demonstrate intricate processes.

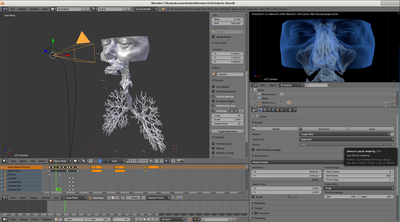

For the creation of 3D animations, we rely on the powerful open-source software Blender. This versatile tool allows us to design detailed and realistic 3D models and animations that bring scientific phenomena to life. Blender provides the flexibility and capability to handle diverse visualization needs.

This multimedia workflow serves multiple purposes:

- Explaining scientific phenomena: Through animations and video clips, we can break down complex processes into digestible and visually intuitive segments, helping audiences grasp abstract concepts more easily.

- Presenting in-house tools and workflows: By showcasing our tools and service operations through multimedia, we provide clear and engaging explanations of how these resources function, enabling users to understand and adopt them more effectively.

- Engaging broader audiences: Multimedia productions serve as a bridge between the scientific community and the public, fostering interest in science and technology while demonstrating the impact and relevance of our work.

Interactive Supercomputing

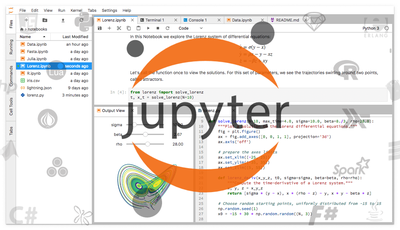

A particularly powerful tool for interactive HPC is the open-source software Jupyter and its web-based interface, JupyterLab. The platform provides an environment that combines interactivity with reproducibility. Jupyter allows researchers to create dynamic documents (notebooks) that combine live code execution, narrative text, mathematical equations, visualizations, and interactive widgets. This combination supports a holistic approach to data analysis and fosters better understanding and collaboration.

On supercomputers, Jupyter and JupyterLab bring unique advantages by addressing the challenges of supporting diverse software workflows. These tools enable researchers to interact directly with HPC resources, facilitating the analysis of massive datasets without requiring data transfer to local systems. The platform's flexibility makes it possible to adapt to a wide range of scientific applications, from computational fluid dynamics to machine learning and beyond.

Key features of Jupyter and JupyterLab in the HPC context include:

- Integration of live code and visualizations: Researchers can execute code directly within the notebook environment, immediately seeing the results in the form of plots, 3D visualizations, or statistical summaries.

- Interactive controls: Widgets such as sliders allow users to dynamically adjust parameters and explore different scenarios without modifying the underlying code.

- Reproducibility: By combining code, data, and results in a single document, Jupyter makes analyses easier to share and reproduce, enhancing scientific transparency.

- Customization and extensibility: A wide range of extensions and plugins can be incorporated to tailor the platform to specific research needs, such as integrating domain-specific libraries or enabling real-time collaboration.
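
As a minimal sketch of the interactive controls described above, the following example binds two sliders to a function. The damped-wave model and the names `peak_amplitude` and `explore` are hypothetical and chosen only for illustration; the sketch assumes that the `ipywidgets` package is available in the notebook environment, and it is not a description of any specific JSC setup.

```python
import math


def peak_amplitude(frequency, damping, samples=500):
    """Largest |y| of y(t) = exp(-damping * t) * sin(2*pi*frequency*t) on [0, 2]."""
    times = [2.0 * i / (samples - 1) for i in range(samples)]
    return max(
        abs(math.exp(-damping * t) * math.sin(2.0 * math.pi * frequency * t))
        for t in times
    )


def explore():
    """Call this in a notebook cell; it needs ipywidgets (assumed to be installed)."""
    from ipywidgets import FloatSlider, interact

    # interact re-runs peak_amplitude and shows its return value on every slider move.
    interact(
        peak_amplitude,
        frequency=FloatSlider(value=1.0, min=0.5, max=5.0, step=0.5),
        damping=FloatSlider(value=0.5, min=0.0, max=3.0, step=0.1),
        samples=500,  # passing a fixed value here would create a third slider,
    )                 # so real code may prefer ipywidgets.fixed(500) instead
```

Calling `explore()` in a notebook cell displays the sliders; the function `peak_amplitude` itself stays a plain Python function that can also be called and tested without any widget code.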

Through the adoption of Jupyter-based workflows, researchers gain an intuitive and versatile tool for working with supercomputers. This approach not only enhances productivity but also bridges the gap between computational power and user accessibility, making cutting-edge HPC resources more approachable and effective for a wide range of scientific disciplines.