Learning to Learn (L2L) on HPC

Summary

"Given a family of tasks, training experience for each of these tasks and a family of performance measures, an algorithm is said to learn to learn if its performance at each task improves with experience and with the number of tasks." (S. Thrun and L. Pratt, 1998)

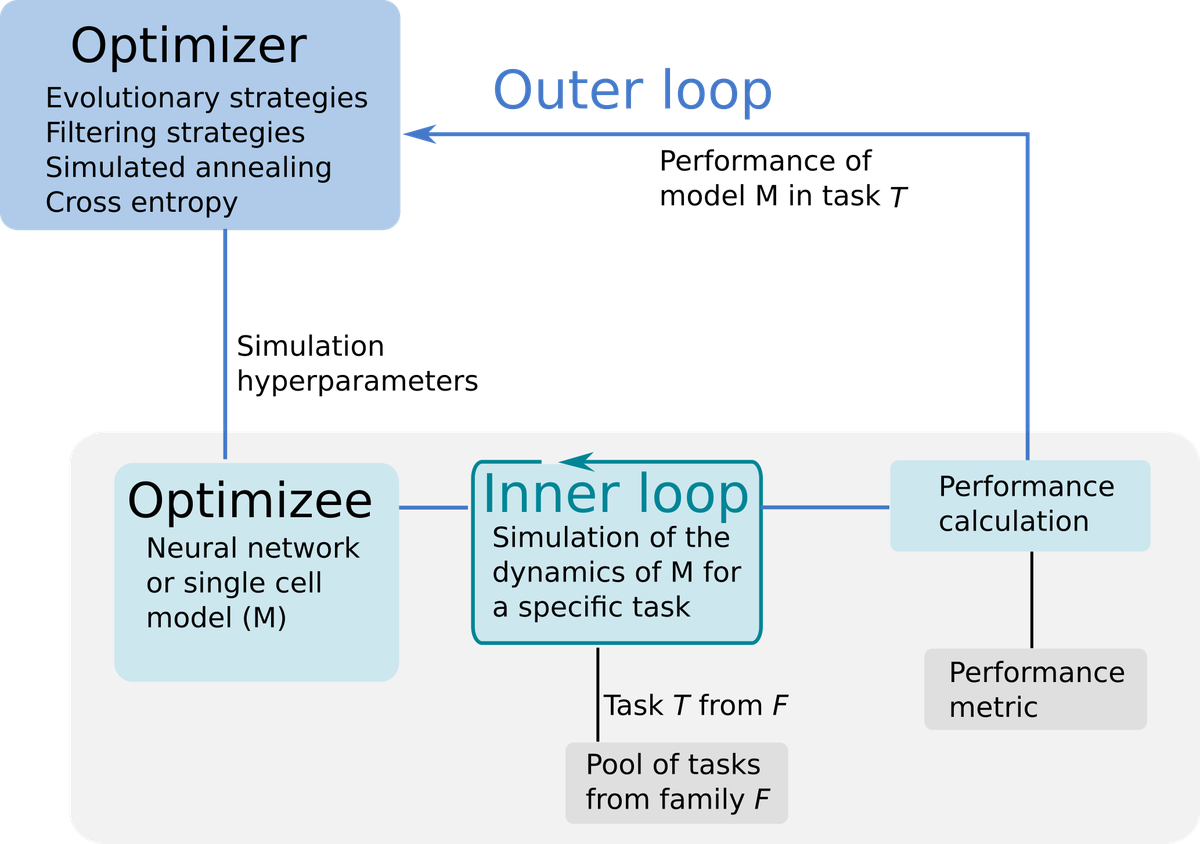

Learning to learn (L2L) is a specific method to improve learning performance. In a normal learning regime, a program is trained against samples from a single task, and a well-defined performance measure is required in order to improve its performance against new samples from that same task. L2L generalizes learning using a two-loop structure (see Figure 1). In the inner loop, the program adapts to learn a specific task. In the outer loop, the program’s general performance is improved by training against samples from a new task drawn from a family of tasks. This is achieved by optimizing the hyperparameters that govern learning (such as a set of synaptic plasticity values) across the family of tasks in the outer loop as the whole system evolves over training runs.
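The two-loop structure can be illustrated with a minimal, self-contained sketch. It does not use the L2L framework's API; the function names (`inner_loop`, `outer_loop`), the toy quadratic task, and the simple mutate-and-select outer optimizer are all assumptions chosen for illustration. The inner loop adapts a single weight to one task by gradient descent, and the outer loop tunes one hyperparameter (the learning rate) across tasks sampled from a family.

```python
import math
import random


def inner_loop(learning_rate, target, steps=20):
    """Inner loop: adapt one weight w to a single task.

    The toy task is to minimise (w - target)^2 by gradient descent.
    Returns the final loss, used as the fitness (lower is better).
    """
    w = 0.0
    for _ in range(steps):
        grad = 2.0 * (w - target)
        w -= learning_rate * grad
    return (w - target) ** 2


def outer_loop(generations=30, population=8, initial_lr=0.01, seed=0):
    """Outer loop: optimise the learning rate across a family of tasks.

    Each generation samples new tasks, evaluates the incumbent and a set
    of mutated candidates on those tasks, and keeps the best candidate.
    """
    rng = random.Random(seed)
    best_lr = initial_lr
    history = []
    for _ in range(generations):
        # Sample new tasks from the family (here: random targets).
        tasks = [rng.uniform(-5.0, 5.0) for _ in range(4)]
        # The incumbent plus log-normally mutated copies of it.
        candidates = [best_lr] + [
            best_lr * math.exp(rng.gauss(0.0, 0.3))
            for _ in range(population - 1)
        ]

        def mean_loss(lr):
            return sum(inner_loop(lr, t) for t in tasks) / len(tasks)

        best_lr = min(candidates, key=mean_loss)
        history.append(best_lr)
    return best_lr, history


if __name__ == "__main__":
    lr, _ = outer_loop()
    print(f"learning rate found by the outer loop: {lr:.3f}")
```

In this toy setting the outer loop only ever replaces the learning rate with one that performs better on the current batch of tasks, so inner-loop performance on an unseen task from the same family improves as more tasks are seen.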

Project description

Exploring the consequences of parameter changes on the observables in simulation or analytical software is a common practice and a general challenge in all computational sciences, as a generalization of the experimental method to numerical analysis. In close collaboration with different groups inside and outside of the EBRAINS Research Infrastructure, we are further developing the L2L framework (https://github.com/Meta-optimization/L2L), which enables large-scale parameter space exploration and optimization of simulations on HPC systems. By extending L2L with job orchestration tools for HPC, we provide a flexible platform for parameter exploration using well-known optimization algorithms.

Our contribution

We implement and integrate machine learning algorithms within the L2L framework. The framework can use the batch systems of HPC systems (e.g. SLURM), especially on the Jülich Supercomputing Centre’s supercomputers. We also benchmark the performance of long-running optimization sessions.
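As a rough illustration of how an optimizer can hand work to a batch system, the sketch below generates one SLURM batch script per candidate parameter set. It is not how L2L itself submits jobs: the helper names and the script `run_individual.py` are hypothetical. Only standard `sbatch` directives (`--job-name`, `--ntasks`, `--time`, `--output`) and `srun` are used, and nothing is submitted.

```python
def make_batch_script(job_name, command, ntasks=1, time_limit="00:10:00"):
    """Return the text of a SLURM batch script that runs one command."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --time={time_limit}",
        # %j is replaced by SLURM with the job ID.
        f"#SBATCH --output={job_name}-%j.out",
        "",
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


def scripts_for_generation(generation, individuals):
    """Build one batch script per individual (a dict of parameters).

    run_individual.py is a hypothetical simulation entry point that
    accepts the parameters as --name=value command line arguments.
    """
    scripts = {}
    for index, params in enumerate(individuals):
        args = " ".join(f"--{k}={v}" for k, v in sorted(params.items()))
        name = f"gen{generation}_ind{index}"
        command = f"python run_individual.py {args}"
        scripts[name] = make_batch_script(name, command)
    return scripts


if __name__ == "__main__":
    generation = scripts_for_generation(0, [{"lr": 0.1}, {"lr": 0.2}])
    for name, text in generation.items():
        print(f"--- {name}.sh ---")
        print(text)
```

Each generated script could be written to a file and passed to `sbatch`; the optimizer would then collect the fitness values once all jobs of a generation have finished.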

Specific Examples

- Study of plasticity rules, e.g. structural plasticity, in NEST and Arbor

- Optimization with non-gradient-based methods, e.g. EnKF-NN

- Connectivity generation and optimization in neural circuits

- Multi-agent simulations with NEST and NetLogo

- Single-cell optimizations with NEST, Arbor, NEURON and EDEN

- Whole brain simulations using The Virtual Brain

Our collaboration partners

This project is being conducted in collaboration with a variety of scientific and development groups, in particular with Dr. Pier Stanislao Paolucci and Dr. Elena Pastorelli from the INFN section in Rome; Prof. Dr. Wolfgang Maass at the Graz University of Technology; Prof. Thomas Nowotny, Dr. James Knight, and Dr. Johanna Senk from the University of Sussex; and Dr. Anand Subramoney from Royal Holloway, University of London. The code was first implemented during the Human Brain Project at the Graz University of Technology and continues to be developed by the SDL Neuroscience in the context of the EBRAINS RI.

References

[1] Thrun, S., & Pratt, L. (Eds.). (1998). Learning to Learn. Springer, Boston, MA.

[2] Yegenoglu, A., Subramoney, A., Hater, T., Jimenez-Romero, C., Klijn, W., Pérez Martín, A., ... & Diaz-Pier, S. (2022). Exploring parameter and hyper-parameter spaces of neuroscience models on high performance computers with learning to learn. Frontiers in Computational Neuroscience, 16, 885207.

[3] Jimenez Romero, C., Yegenoglu, A., Pérez Martín, A., Diaz-Pier, S., & Morrison, A. (2024). Emergent communication enhances foraging behavior in evolved swarms controlled by spiking neural networks. Swarm Intelligence, 18(1), 1-29.

[4] Diaz-Pier, S., Nowke, C., & Peyser, A. (2017). Efficiently navigating through structural connectivity parameter spaces for multiscale whole brain simulations. In 1st Human Brain Project Student Conference, p. 86, 8-10 Feb. 2017.