Project SunGAN

Distributed GANs for synthetic Solar images

In the project "Distributed GANs for synthetic Solar images", we train Generative Adversarial Networks (GANs) that create realistic images of the sun in the extreme ultraviolet (EUV).

The Solar Dynamics Observatory (SDO) takes fascinating, beautiful images of the sun that allow scientists to gain deep insights into dynamic processes within the sun. Imaging yields excellent qualitative insights, but a quantitative understanding is very difficult, because the space of all possible images forms a highly complex distribution. Generative models make it possible to study and understand this distribution in image space.

In this project, our team develops machine learning models that create realistic high-resolution solar images. By exploring the latent space of a trained model, we can systematically study how its hidden variables control qualitative and quantitative features of the generated images.
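The text does not specify how the latent space is explored. One common approach, shown here as a minimal sketch, is to walk between two latent vectors and render each intermediate point with the generator. The code is plain Python; `dim = 512` and the commented-out `generator` call are hypothetical placeholders, not the project's actual model.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def lerp(z0: Vector, z1: Vector, t: float) -> Vector:
    # Straight line from z0 (t = 0) to z1 (t = 1).
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def slerp(z0: Vector, z1: Vector, t: float, eps: float = 1e-7) -> Vector:
    # Spherical interpolation along the arc between z0 and z1.
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    cos_omega = sum(a * b for a, b in zip(z0, z1)) / (n0 * n1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # angle between the vectors
    if math.sin(omega) < eps:
        # Nearly parallel or antipodal: the arc is ill-defined, fall back to lerp.
        return lerp(z0, z1, t)
    s0 = math.sin((1.0 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(z0, z1)]


def latent_walk(z0: Vector, z1: Vector, steps: int,
                interp: Callable[[Vector, Vector, float], Vector] = slerp) -> List[Vector]:
    # `steps` evenly spaced points from z0 to z1, endpoints included (steps >= 2).
    return [interp(z0, z1, i / (steps - 1)) for i in range(steps)]


rng = random.Random(0)
dim = 512  # hypothetical latent dimensionality
z_a = [rng.gauss(0.0, 1.0) for _ in range(dim)]
z_b = [rng.gauss(0.0, 1.0) for _ in range(dim)]
path = latent_walk(z_a, z_b, steps=8)
# images = [generator(z) for z in path]  # `generator` is a hypothetical trained model
```

For two latents of equal norm, `slerp` keeps every intermediate point at that norm, whereas `lerp` cuts through the interior of the sphere. Because high-dimensional Gaussian samples concentrate near a shell of radius about sqrt(dim), `slerp` keeps the walk where the generator saw its training inputs. The StyleGAN paper [1] uses slerp in the input latent space Z and lerp in the intermediate latent space W when measuring perceptual path length.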

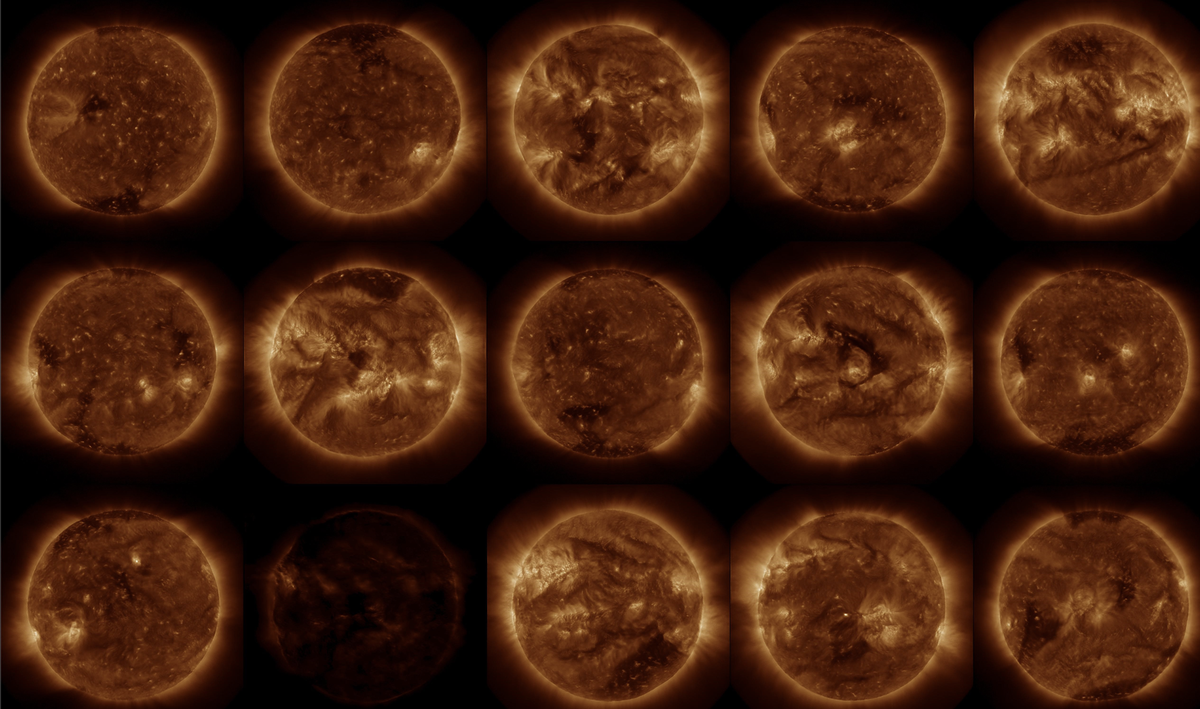

We combine different state-of-the-art methods to obtain high-quality images with multiple consistent channels. We study different GAN architectures (StyleGAN [1], StyleGAN2 [2], StyleALAE [3], BigGAN [4], U-Net BigGAN [5]) and combine them with differentiable data augmentation to increase data efficiency [6,7]. We train the models in a highly parallel fashion on many nodes of the JUWELS Cluster at the Jülich Supercomputing Centre. The image above shows a sample of solar images generated by one of our models; they resemble images taken by SDO at a wavelength of 193 Å.
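The differentiable augmentation idea from [7] can be sketched as follows: the same family of random transforms T is applied to both real and generated images before they reach the discriminator, so the discriminator never sees un-augmented data. The four ops below are loosely modeled on the brightness, contrast, translation, and cutout ops described in [7], simplified to single-channel images stored as nested Python lists; the function names and parameter values are illustrative assumptions, not the project's code. For fixed random parameters every op is affine in the pixel values, which is why gradients can flow through T back to the generator once the same ops are written as tensor operations in an autodiff framework.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # single-channel image, indexed [row][col]


def rand_brightness(img: Image, rng: random.Random) -> Image:
    # Add one random offset in [-0.5, 0.5) to every pixel.
    delta = rng.random() - 0.5
    return [[p + delta for p in row] for row in img]


def rand_contrast(img: Image, rng: random.Random) -> Image:
    # Scale deviations from the image mean by a random factor in [0.5, 1.5).
    mean = sum(sum(row) for row in img) / (len(img) * len(img[0]))
    factor = rng.random() + 0.5
    return [[(p - mean) * factor + mean for p in row] for row in img]


def rand_translation(img: Image, rng: random.Random, ratio: float = 0.125) -> Image:
    # Shift by up to `ratio` of the image size in each direction, filling with zeros.
    h, w = len(img), len(img[0])
    dy = rng.randint(-int(h * ratio), int(h * ratio))
    dx = rng.randint(-int(w * ratio), int(w * ratio))
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = img[src_y][src_x]
    return out


def rand_cutout(img: Image, rng: random.Random, ratio: float = 0.5) -> Image:
    # Zero out one randomly placed rectangle covering `ratio` of each side
    # (simplified: the rectangle always lies fully inside the image).
    h, w = len(img), len(img[0])
    ch, cw = max(1, int(h * ratio)), max(1, int(w * ratio))
    y0, x0 = rng.randint(0, h - ch), rng.randint(0, w - cw)
    out = [row[:] for row in img]
    for y in range(y0, y0 + ch):
        for x in range(x0, x0 + cw):
            out[y][x] = 0.0
    return out


def diff_augment(img: Image, rng: random.Random) -> Image:
    # Compose the ops; fresh random parameters are drawn on every call.
    for op in (rand_brightness, rand_contrast, rand_translation, rand_cutout):
        img = op(img, rng)
    return img


def augment_pair(real: List[Image], fake: List[Image],
                 rng: random.Random) -> Tuple[List[Image], List[Image]]:
    # Discriminator update: augment BOTH real and generated images.
    # Generator update (not shown): feed diff_augment(G(z)) to D as well.
    return ([diff_augment(x, rng) for x in real],
            [diff_augment(x, rng) for x in fake])


rng = random.Random(0)
real = [[[rng.random() for _ in range(16)] for _ in range(16)] for _ in range(4)]
fake = [[[rng.random() for _ in range(16)] for _ in range(16)] for _ in range(4)]  # stand-in for G(z)
real_aug, fake_aug = augment_pair(real, fake, rng)
```

Augmenting only the real images would teach the generator to reproduce the augmentations themselves; [7] avoids this by applying T to real and generated images alike, in both the discriminator and the generator updates.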

Collaborators: Ruggero Vasile, Frederic Effenberger (GFZ Potsdam)

References

- [1] Tero Karras, Samuli Laine, Timo Aila, “A Style-Based Generator Architecture for Generative Adversarial Networks”, https://arxiv.org/abs/1812.04948

- [2] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, Timo Aila, “Analyzing and improving the image quality of StyleGAN”, https://arxiv.org/abs/1912.04958

- [3] Stanislav Pidhorskyi, Donald Adjeroh, Gianfranco Doretto, “Adversarial Latent Autoencoders”, https://arxiv.org/abs/2004.04467

- [4] Andrew Brock, Jeff Donahue, Karen Simonyan, “Large Scale GAN Training for High Fidelity Natural Image Synthesis”, https://arxiv.org/abs/1809.11096v2

- [5] Edgar Schönfeld, Bernt Schiele, Anna Khoreva, “A U-Net Based Discriminator for Generative Adversarial Networks”, Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 8207–8216 (2020)

- [6] Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, Timo Aila, “Training Generative Adversarial Networks with Limited Data”, https://arxiv.org/abs/2006.06676

- [7] Shengyu Zhao, Zhijian Liu, Ji Lin, Jun-Yan Zhu, Song Han, “Differentiable Augmentation for Data-Efficient GAN Training”, NeurIPS 2020